Effective IAM for AWS

Simplify AWS IAM by using the best parts

Less is more.

Simplify AWS security by using the best parts of IAM consistently. That's how you realize AWS IAM best practices at scale. You'll never be able to understand all of IAM. But you don't need to. AWS IAM's core capabilities can satisfy nearly all information security requirements. Focus your effort. Put AWS IAM's core capabilities into usable packages and patterns for developers. Then leverage those capabilities across your organization.

Your approach must work in the messy world of existing AWS deployments. The real world is full of AWS accounts that:

- grew organically, perhaps starting with an experiment and now running production

- operate software produced by internal and external teams with varying AWS skills

- use AWS managed policies

- use AWS managed data services

Many organizations' most critical AWS accounts look like a kitchen sink full of dirty dishes. You can't just start over. And the cleanup procedure isn't in the AWS IAM documentation.

How will you get this under control?

Adopt a security strategy that helps you clean up your accounts incrementally. Start by securing your most critical data with resource boundaries — a simple, scalable pattern.

Points of Leverage

Consistent abstractions provide leverage. When a feature works the same way across the environment, you can use that feature as a foundational building block in your security architecture. However, AWS is famously built by many independently operating teams that ship minimal services early. So most services are designed and behave a little bit differently than other services.

So what levers do you have to simplify access control?

First, focus access control efforts by organizing each use case's data into AWS accounts, then select the IAM context you will leverage in policies.

Second, use resource policies to control access to critical data within an account.

Third, use infrastructure code libraries and tools to simplify and scale IAM configuration.

Focus access control efforts

Organize and separate major use cases from each other using the approach described in 'Architect AWS organization for scale'.

If you have a single AWS account running multiple use cases that you need to separate (dev, stage, and prod for an app or multiple business units), then start planning a migration.

It's usually easiest to migrate non-production workloads to new accounts. Yes, migrating workloads out of an AWS account is often a major endeavor. But it regularly succeeds and simplifies security over the long run. Don't worry. After isolating use cases into dedicated AWS accounts, you can explicitly grant access between workloads using resource policies.

Tune access control focus by selecting a handful of policy condition context keys you will use to authorize requests consistently. AWS populates each API request with relevant context. The request context and policy condition keys enable more flexible policies. They also allow fine-grained control over requests originating both inside and outside your account or organization.

For example, instead of requiring an exact match of an AWS account ID, IAM user ARN, or role ARN, you can use wildcards to match identities according to a convention, even when they don't exist yet.

    {
      "Condition": {
        "ArnLike": {
          "aws:PrincipalArn": [
            "arn:aws:iam::111:user/admin",
            "arn:aws:iam::222:role/delivery",
            "arn:aws:iam::333:role/auditor-*"
          ]
        },
        "StringEquals": {
          "aws:PrincipalOrgID": [ "o-abc1234" ]
        }
      }
    }

Each of the condition operations (ArnLike, StringEquals) must be satisfied for the request to proceed. When a context key like aws:PrincipalArn specifies multiple values, then a request with any of those values satisfies the condition operation.

This Condition block explicitly identifies the admin user in account 111 and delivery role in account 222. It also identifies any roles whose name begins with auditor in account 333. Those principals' requests are only allowed if they belong to the AWS organization id o-abc1234.
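The evaluation rule described above (every operator must pass, any listed value may match) can be sketched in a few lines of Python. This is a simplified model for building intuition, not AWS's implementation: the function names are hypothetical, only the two operators from the example are modeled, and ArnLike is approximated with plain wildcard matching over the whole ARN.

```python
from fnmatch import fnmatchcase

# The Condition block from the example above.
CONDITION = {
    "ArnLike": {
        "aws:PrincipalArn": [
            "arn:aws:iam::111:user/admin",
            "arn:aws:iam::222:role/delivery",
            "arn:aws:iam::333:role/auditor-*",
        ]
    },
    "StringEquals": {"aws:PrincipalOrgID": ["o-abc1234"]},
}


def _matches(operator: str, expected: str, actual: str) -> bool:
    """Compare one policy value with one request context value."""
    if operator == "ArnLike":
        # Assumption: treat the ARN as a single wildcard pattern.
        return fnmatchcase(actual, expected)
    if operator == "StringEquals":
        return actual == expected
    raise ValueError(f"operator not modeled in this sketch: {operator}")


def condition_satisfied(condition: dict, request_context: dict) -> bool:
    """AND across operators and keys, OR across the values of one key."""
    for operator, keys in condition.items():
        for key, expected_values in keys.items():
            actual = request_context.get(key)
            if actual is None:
                return False  # a missing context key fails these operators
            if not any(_matches(operator, v, actual) for v in expected_values):
                return False
    return True
```

An auditor role in account 333 passes only when its organization ID also matches; the same role calling from another organization fails the StringEquals operator and the whole condition with it.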

These are the most useful global condition context keys:

- aws:PrincipalArn (string): Compare the principal ARN that made the request with the ARN or pattern that you specify in the policy.

- aws:PrincipalIsAWSService (boolean): Check whether the call to your resource is being made directly by an AWS service principal.

- aws:PrincipalOrgID (string): Compare the requesting principal's AWS Organization id to an expected identifier in the policy.

- aws:PrincipalOrgPaths (string): Compare the requesting principal's AWS Organizations path to an expected path in the policy.

- aws:SourceVpc (string): Check whether the request came from a VPC specified in the policy.

These context keys enable you to identify AWS IAM and Service principals, what organization or OU they belong to, and if the request is coming from a particular network. These context keys solve most of the authentication problems policy authors face.

Let's discuss an approach that simplifies access control to data in a scalable way: Resource Policies.

Control access to data with resource policies

Use resource policies to control access to critical data within an account. Resource policies are ideal for establishing a resource boundary. A resource boundary controls how principals may use a specific resource and where those principals access data from. Because resource policies integrate directly into data access paths, you can create resource boundaries easily.

There are few truly critical data sources in most organizations. Create resource policies that enforce least privilege access to those resources. This approach improves security posture quickly.

Most resources commonly shared between AWS accounts support resource policies: S3 buckets, SQS queues, Lambda functions, SSM parameters, and more. Critically, KMS encryption keys support resource policies.

You can leverage resource policies in two ways:

- Control access to data by applying resource policies directly to the resource

- Control access to data by encrypting it with a KMS key and controlling access to (just) the key

We used the first approach in 'Control access to any resource'. We protected a bucket containing critical data with a least privilege bucket policy. That S3 bucket resource policy:

- Allowed authorized principals to administer the bucket and access data

- Denied unauthorized principals access to the bucket

- Denied insecure access and storage by enforcing encryption in-transit and at-rest

But the second approach of encrypting data with KMS and controlling access to the key is more general, and provides you more leverage.

Secure data in AWS with Key Management Service (KMS)

This section explains core KMS concepts then shows how to encrypt your data to scale access management uniformly across disparate data services.

AWS Key Management Service (KMS) is a fully managed encryption API and key vault. KMS provides encryption operations like encrypt, decrypt, sign, and verify as APIs for use by your applications and by other AWS services. When you encrypt data in AWS data services like S3 or SQS, KMS is the service that safely performs those operations on your behalf. KMS integrates with more than 65 AWS services. Most engineers’ first encounter with KMS is to satisfy a requirement to encrypt data at rest, but you can take it much further.

Core KMS Concepts & Resources

Encryption keys are the foundational resource of KMS, and are called customer master keys (CMKs). A customer master key resource contains secret material used by encryption and decryption operations. A CMK also has metadata that identifies the key and a lifecycle to help you manage it.

KMS supports two types of customer master keys:

- AWS managed CMK: a key created automatically by AWS when you first create an encrypted resource in a service such as S3 or SQS. You can track the usage of an AWS managed CMK, but the lifecycle and permissions of the key are managed on your behalf.

- Customer managed CMK: a key created by an AWS customer for a specific use case. Customer managed CMKs give you full control over the lifecycle and permissions that determine who can use the key.

Some people are satisfied by encrypting data with an AWS managed CMK. An AWS managed CMK is used when you tell S3 or another data service to encrypt your data, but you don’t specify a key. These keys have aliases with friendly names like aws/s3. Indeed this does encrypt your data at rest. This encryption protects your data from such threats as an AWS datacenter employee ripping a drive out of a server and walking out the door. But this is probably not the information security threat you should worry about.

Encrypting data with AWS managed CMKs does not prevent a person or application with access to your account from reading that data using AWS APIs. You cannot control which IAM principals within the account use these default keys. AWS-managed key resource permissions are managed on your behalf. You cannot change those permissions. All the account's IAM principals can encrypt and decrypt data with AWS managed CMKs as long as the IAM principal can access the datastore. AWS accomplishes this with an uneditable key resource policy statement like:

    {
      "Sid": "Allow access through S3 for all principals in the account that are authorized to use S3",
      "Effect": "Allow",
      "Principal": { "AWS": "*" },
      "Action": [
        "kms:Encrypt",
        "kms:Decrypt",
        "kms:ReEncrypt*",
        "kms:GenerateDataKey*",
        "kms:DescribeKey"
      ],
      "Resource": "*",
      "Condition": {
        "StringEquals": {
          "kms:ViaService": "s3.us-east-1.amazonaws.com",
          "kms:CallerAccount": "111"
        }
      }
    }

This statement from an aws/s3 key policy allows anyone in account 111 to decrypt or encrypt data using the key as long as the request came via S3.

A customer managed CMK is different. You create customer managed CMKs and control who may use them. Now you can control access to data for any specific use case. Create a key for the use case. Then configure the key's resource access policy for that use case's requirements. Finally encrypt data with the key.

Let's dive into the uniquely powerful world of key resource policy.

KMS Key Resource Policy

KMS key policies behave very differently than other resource policies even though they look the same.

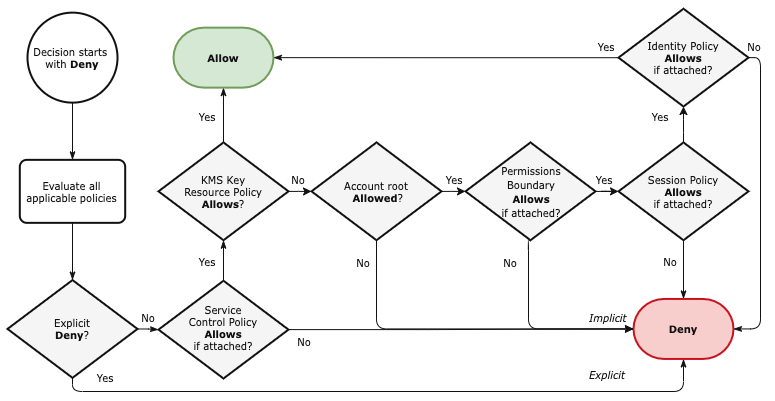

Figure 5.1 KMS Key Access Evaluation Logic

Every KMS key must have a key policy. Critically, that single key policy is the root of control for all access to the key. Key policy is the primary way to control access to a key and introduces an exception to the standard security policy evaluation flow.

⚠️ The account's identity policies are only integrated into the policy evaluation flow when the key policy allows access to the account's root user.

An account's root user cannot access a key unless the key policy explicitly allows it. Consequently, the account's IAM principals can access the key in only two ways: the key policy grants them access directly, or the key policy grants access to the root user and the account's identity policies grant access to the key.

This access evaluation behavior diverges from IAM's standard approach that allows access via either identity or resource policies. But it arguably makes implementing least privilege easier.
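The decision flow in Figure 5.1 can be written down as a small function. The sketch below is a deliberately reduced model for same-account requests only: the class and field names are hypothetical, each policy lookup is collapsed into a boolean, and KMS grants are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyAccessRequest:
    """Inputs to the simplified decision; every field is an assumption of this sketch."""
    key_policy_allows_principal: bool     # key policy names the caller directly
    key_policy_allows_account_root: bool  # key policy allows the account's root user
    identity_policy_allows: bool          # caller's identity policy allows the KMS action
    explicit_deny: bool = False           # any applicable policy explicitly denies


def key_access_allowed(request: KeyAccessRequest) -> bool:
    """Return True if the simplified KMS evaluation would allow the request."""
    if request.explicit_deny:
        return False  # an explicit Deny always wins
    if request.key_policy_allows_principal:
        return True   # the key policy alone is sufficient
    # Identity policies only count once the key policy allows the root user.
    return request.key_policy_allows_account_root and request.identity_policy_allows
```

The last line is the part that differs from most other resources: an identity policy that allows kms:Decrypt has no effect unless the key policy first allows the account's root user.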

Let's examine the default key policy since there are many keys with this policy. The AWS console, CLI, SDKs, and CDK all generate a default key policy for customer managed CMKs. There are several variations of the default key policy, but they are effectively the same. The default key policies allow access to the root user and enable identity policies. They look like:

Example: Default Key Policy for a Customer Managed Key

    {
      "Version": "2012-10-17",
      "Id": "DefaultKeyPolicy",
      "Statement": [
        {
          "Sid": "Allow Root User to Administer Key And Identity Policies",
          "Effect": "Allow",
          "Principal": { "AWS": "arn:aws:iam::123456789012:root" },
          "Action": "kms:*",
          "Resource": "*"
        }
      ]
    }

The default key resource policy:

- explicitly allows the AWS account full access to administer and use the key

- implicitly allows use of the key by any IAM principals permitted by identity policies via the root user

- does not Deny any IAM principals the ability to use the key

This is effectively the same level of access control as an AWS managed key. All the account's IAM principals with broad KMS permissions granted by identity policies can use the key.

Suppose you wanted to narrow this key's usage to only the appA IAM role and ci IAM user. You can do that in two ways:

- Grant access to only those principals in the key policy, but not root

- Grant access to root, but restrict the IAM principals that can use the key

Let's examine both approaches.

Grant access solely via key resource policy

First, you can grant access to only the ci and appA IAM principals in the key policy, omitting account root user access:

Example: Control access solely via key policy

    {
      "Version": "2012-10-17",
      "Id": "AllowAccessOnlyToSpecificIAMPrincipals",
      "Statement": [
        {
          "Sid": "AllowFullAccess",
          "Effect": "Allow",
          "Action": "kms:*",
          "Resource": "*",
          "Principal": {
            "AWS": [ "arn:aws:iam::123456789012:user/ci" ]
          }
        },
        {
          "Sid": "AllowReadAndWrite",
          "Effect": "Allow",
          "Action": [
            "kms:Decrypt",
            "kms:Encrypt"
          ],
          "Resource": "*",
          "Principal": {
            "AWS": [ "arn:aws:iam::123456789012:role/appA" ]
          }
        }
      ]
    }

Only the ci and appA principals will be able to use this key or delegate access to it. And they can only use the permissions allowed by the key policy. So only the ci user can manage this key and its access policy.

The account's root user does not have access. Consequently:

- the Identity policy system is not enabled, so Identity policies cannot allow additional access

- the root user cannot access the key, not even to change the key policy or manage the key's lifecycle

Finally, least privilege!

But perhaps this is too little privilege in practice. If the ci and appA principals are removed from IAM, the key will become unmanageable and data encrypted with this key inaccessible. You can file a ticket with AWS Support to restore access to the key. However, you will not have access to data encrypted with this key until access is restored. AWS' default key policy makes sense with this context.
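One way to catch this lockout risk before deployment is to check whether a key policy still allows the account's root user. The following is a minimal sketch with a hypothetical function name. It inspects only the Principal element of Allow statements and ignores actions and conditions, so treat a positive result as a hint rather than a proof.

```python
def _as_list(value):
    """Policy elements may be a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def enables_identity_policies(key_policy: dict, account_id: str) -> bool:
    """Return True if an Allow statement names the account's root user as a principal."""
    # A bare account ID in the Principal element is equivalent to the root ARN.
    root_forms = {f"arn:aws:iam::{account_id}:root", account_id}
    for statement in _as_list(key_policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal", {})
        if not isinstance(principal, dict):
            continue  # e.g. "Principal": "*" does not name the root user
        if root_forms.intersection(_as_list(principal.get("AWS", []))):
            return True
    return False
```

Run against the two policies above, the default key policy returns True and the policy that names only ci and appA returns False, which flags it as a key that becomes unmanageable if those principals are deleted.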

But there is another path to least privilege that is more flexible.

Grant access to account and narrow via key resource policy

You can enable account root access and identity policies then use key resource policy to narrow access to specific IAM principals. Consider this NarrowerKeyPolicy:

Example: Narrow Key Policy

    {
      "Version": "2012-10-17",
      "Id": "NarrowerKeyPolicy",
      "Statement": [
        {
          "Sid": "AllowFullAccess",
          "Effect": "Allow",
          "Action": "kms:*",
          "Resource": "*",
          "Principal": { "AWS": "*" },
          "Condition": {
            "ArnEquals": {
              "aws:PrincipalArn": [
                "arn:aws:iam::123456789012:role/appA",
                "arn:aws:iam::123456789012:user/ci"
              ]
            }
          }
        },
        {
          "Sid": "DenyEveryoneElse",
          "Effect": "Deny",
          "Action": "kms:*",
          "Resource": "*",
          "Principal": { "AWS": "*" },
          "Condition": {
            "ArnNotEquals": {
              "aws:PrincipalArn": [
                "arn:aws:iam::123456789012:root",
                "arn:aws:iam::123456789012:user/ci",
                "arn:aws:iam::123456789012:role/appA"
              ]
            },
            "Bool": {
              "aws:PrincipalIsAWSService": "false",
              "kms:GrantIsForAWSResource": "false"
            }
          }
        }
      ]
    }

The NarrowerKeyPolicy's AllowFullAccess statement permits full access by the appA and ci principals. This policy also denies access to all other principals. The DenyEveryoneElse statement closes off unintended access granted by overly-permissive Identity policies attached to principals in the account. The aws:PrincipalIsAWSService condition ensures the deny does not apply to AWS services like DynamoDB and S3 so they can use the key on your behalf. Similarly, the kms:GrantIsForAWSResource condition permits AWS services like RDS to maintain KMS key grants, a form of delegated access.

This policy takes a step towards least privilege. It is also more flexible, identifying principals with the Arn condition operators.
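To see when the DenyEveryoneElse statement takes effect, it can help to model its Condition block directly. The sketch below follows the reading given in the text: a negated operator with several values is satisfied only when the caller matches none of them, and all operators in the block must be satisfied together. The function name is hypothetical, and both Boolean keys are assumed to be present in the request; how AWS treats an absent key is not modeled here.

```python
ACCOUNT_ID = "123456789012"

# Principals listed under ArnNotEquals in the DenyEveryoneElse statement.
EXEMPT_ARNS = [
    f"arn:aws:iam::{ACCOUNT_ID}:root",
    f"arn:aws:iam::{ACCOUNT_ID}:user/ci",
    f"arn:aws:iam::{ACCOUNT_ID}:role/appA",
]


def deny_everyone_else_applies(
    principal_arn: str,
    principal_is_aws_service: bool = False,
    grant_is_for_aws_resource: bool = False,
) -> bool:
    """Return True if the Deny statement's conditions all hold for this request."""
    # ArnNotEquals with multiple values: the caller must match none of them.
    arn_not_equals = all(principal_arn != arn for arn in EXEMPT_ARNS)
    # Bool block: both keys must be "false" for the Deny to apply.
    bool_block = (not principal_is_aws_service) and (not grant_is_for_aws_resource)
    return arn_not_equals and bool_block
```

An unlisted user such as arn:aws:iam::123456789012:user/intern is denied, the ci user is not, and a request made directly by an AWS service principal is not denied even though its ARN is absent from the list.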

You may want to refine the policy further. The application's appA role probably does not need to administer the key. You could create a statement that only gives appA privileges to encrypt and decrypt data. You might also only allow ci to administer the key. That key policy could look like the one generated to protect the bucket in 'Control access to any resource'.

Consider the least privilege key policy that the k9 Security libraries for Terraform and CDK generate:

Example: Least Privilege Key Policy

    {
      "Version": "2012-10-17",
      "Id": "LeastPrivilegeKeyPolicy",
      "Statement": [
        {
          "Sid": "AllowRestrictedAdministerResource",
          "Effect": "Allow",
          "Action": [
            "kms:CreateAlias",
            "kms:CreateKey",
            "kms:ScheduleKeyDeletion",
            # omit 17 Administration actions
          ],
          "Resource": "*",
          "Principal": { "AWS": "*" },
          "Condition": {
            "ArnEquals": {
              "aws:PrincipalArn": [
                "arn:aws:iam::123456789012:user/person1",
                "arn:aws:iam::123456789012:user/ci"
              ]
            }
          }
        },
        # omit Read Config statement
        {
          "Sid": "AllowRestrictedReadData",
          "Effect": "Allow",
          "Action": [
            "kms:Decrypt",
            "kms:Verify"
          ],
          "Resource": "*",
          "Principal": { "AWS": "*" },
          "Condition": {
            "ArnEquals": {
              "aws:PrincipalArn": [
                "arn:aws:iam::123456789012:user/person1",
                "arn:aws:iam::123456789012:role/appA"
              ]
            }
          }
        },
        {
          "Sid": "AllowRestrictedWriteData",
          "Effect": "Allow",
          "Action": [
            "kms:Encrypt",
            "kms:GenerateDataKey",
            "kms:Sign"
            # omit 7 Write actions
          ],
          "Resource": "*",
          "Principal": { "AWS": "*" },
          "Condition": {
            "ArnEquals": {
              "aws:PrincipalArn": [
                "arn:aws:iam::123456789012:user/person1",
                "arn:aws:iam::123456789012:role/appA"
              ]
            }
          }
        },
        # omit unused Delete Data and Custom capability statements
        {
          "Sid": "DenyEveryoneElse",
          "Effect": "Deny",
          "Action": "kms:*",
          "Resource": "*",
          "Principal": { "AWS": "*" },
          "Condition": {
            "ArnNotEquals": {
              "aws:PrincipalArn": [
                "arn:aws:iam::123456789012:root",
                "arn:aws:iam::123456789012:user/person1",
                "arn:aws:iam::123456789012:user/ci",
                "arn:aws:iam::123456789012:role/appA"
              ]
            },
            "Bool": {
              "aws:PrincipalIsAWSService": "false",
              "kms:GrantIsForAWSResource": "false"
            }
          }
        }
      ]
    }

The LeastPrivilegeKeyPolicy allows ci to administer the key, appA to read and write data with the key, and person1 to administer, read, and write. The DenyEveryoneElse statement denies access to everyone but those principals and the root user. Appendix Least Privilege Key Policy contains the full 175-line policy, including actions and unused statements omitted for brevity.

Use the general form of the LeastPrivilegeKeyPolicy's Allow and Deny statements across all KMS keys and other resources that support policies.

- Allow access capabilities to explicitly-named IAM principals

- Deny everyone else, except for AWS Service principals and service-linked roles
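Because the general form is so regular, it lends itself to generation. The sketch below builds a key policy in that shape from a small mapping of statement IDs to actions and principals. It is an illustration only: build_resource_policy and its input format are hypothetical and are not the interface of any library mentioned in this chapter.

```python
import json


def build_resource_policy(account_id: str, grants: dict) -> dict:
    """Build Allow statements for named principals plus a closing Deny.

    grants maps a statement Sid to {"actions": [...], "principals": [...]}.
    """
    statements = []
    named_principals = set()
    for sid, grant in grants.items():
        named_principals.update(grant["principals"])
        statements.append({
            "Sid": sid,
            "Effect": "Allow",
            "Action": list(grant["actions"]),
            "Resource": "*",
            "Principal": {"AWS": "*"},
            # Access is narrowed to explicitly named principals by condition.
            "Condition": {"ArnEquals": {"aws:PrincipalArn": sorted(grant["principals"])}},
        })
    # Deny everyone who is not the root user or a named principal,
    # except AWS services acting on the account's behalf.
    exempt = [f"arn:aws:iam::{account_id}:root"] + sorted(named_principals)
    statements.append({
        "Sid": "DenyEveryoneElse",
        "Effect": "Deny",
        "Action": "kms:*",
        "Resource": "*",
        "Principal": {"AWS": "*"},
        "Condition": {
            "ArnNotEquals": {"aws:PrincipalArn": exempt},
            "Bool": {
                "aws:PrincipalIsAWSService": "false",
                "kms:GrantIsForAWSResource": "false",
            },
        },
    })
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    example = build_resource_policy("123456789012", {
        "AllowRestrictedReadData": {
            "actions": ["kms:Decrypt"],
            "principals": ["arn:aws:iam::123456789012:role/appA"],
        },
    })
    print(json.dumps(example, indent=2))
```

Deriving the Deny list from the Allow statements keeps the two in sync, which is the detail that is easiest to get wrong when writing these policies by hand.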

Designing and implementing resource policies from scratch takes a lot of time, energy, and expertise. Reuse known-good solutions to ship quickly with confidence.

Now let’s leverage key policy to control access to multiple data stores.

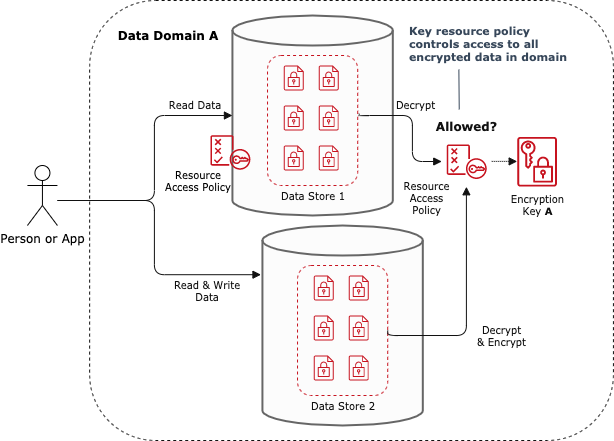

Pattern: Partition Data with Encryption

Here’s where access control gets exciting (no, really). You can think of KMS resource policy as a composable access control that is usable from any other AWS data service.

KMS is a composable, scalable, uniform mechanism for controlling access to data across data stores.

Once you learn how to use KMS resource policies effectively, you can use encryption keys and key resource policies as a uniform core access control mechanism for all AWS data services.

By managing access to a handful of critical encryption keys, you can worry less about the tens of data stores or hundreds (thousands?) of principals in your AWS account.

Leverage.

Figure 5.2 Control access to entire data domain with KMS

The Partition Data with Encryption pattern partitions and controls access to an entire data domain. This security architecture pattern encrypts that domain’s data with a dedicated customer managed KMS encryption key and applies a key resource access policy that is appropriate for the people and applications in that domain. A data domain encompasses the data managed by a single service or collaborating set of microservices such as ‘credit-applications’ or ‘orders’ (cf. Bounded Context). This pattern adds resource access policy capabilities into data services without native support for resource policies such as Elastic Block Store and Relational Database Service.

This pattern helps you:

- Manage data access uniformly across disparate data services

- Simplify architecture, development, and audit processes with a consistent access control strategy

- Reduce access policy language knowledge and implementation requirements

- Explicitly control access to data even when the AWS data service does not support resource policies

How it works

In order for a person or application to read and write data to an AWS data store, their IAM principal must also have access to use the KMS key needed for those operations. This makes customer managed encryption keys a convenient point of access control.

For example, when an IAM user reads encrypted data from S3 using the s3:GetObject API, the user also needs permission to call kms:Decrypt with the key used to encrypt the data. In this scenario, the S3 service invokes kms:Decrypt on the IAM user’s behalf. If the user has permission to call kms:Decrypt with the required key, then the s3:GetObject operation can proceed. Without decrypt permissions for the key, s3:GetObject will fail.

The KMS API has a small set of actions relevant to reading and writing data via another service:

- Read data: Decrypt

- Write data: Encrypt, GenerateDataKey, GenerateDataKeyPair, GenerateDataKeyPairWithoutPlaintext, GenerateDataKeyWithoutPlaintext, ReEncryptFrom, ReEncryptTo

You can control access to an entire data domain by encrypting that data with a dedicated CMK and applying a key policy that allows intended access to the key’s read, write, and administration operations.
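The read and write action sets listed above are small enough to audit mechanically. As a rough sketch, the function below reports which data capabilities a list of granted KMS action patterns would confer. The function name and the capability labels are chosen for illustration, and wildcard handling is approximated with simple case-insensitive pattern matching.

```python
from fnmatch import fnmatchcase

# Action sets taken from the lists above.
READ_DATA_ACTIONS = ["kms:Decrypt"]
WRITE_DATA_ACTIONS = [
    "kms:Encrypt",
    "kms:GenerateDataKey",
    "kms:GenerateDataKeyPair",
    "kms:GenerateDataKeyPairWithoutPlaintext",
    "kms:GenerateDataKeyWithoutPlaintext",
    "kms:ReEncryptFrom",
    "kms:ReEncryptTo",
]


def _grants(pattern: str, action: str) -> bool:
    """True if a granted pattern such as 'kms:GenerateDataKey*' covers the action."""
    return fnmatchcase(action.lower(), pattern.lower())


def data_capabilities(granted_patterns: list) -> set:
    """Return the data capabilities conferred by the granted KMS action patterns."""
    capabilities = set()
    if any(_grants(p, a) for p in granted_patterns for a in READ_DATA_ACTIONS):
        capabilities.add("read-data")
    if any(_grants(p, a) for p in granted_patterns for a in WRITE_DATA_ACTIONS):
        capabilities.add("write-data")
    return capabilities
```

A statement granting only kms:DescribeKey confers neither capability, while kms:* confers both, which is why broad KMS permissions in identity policies deserve attention once a key guards a whole data domain.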

The Partition Data with Encryption pattern helps you scalably control and audit access to each domain's data with a resource boundary. The key resource policy clearly identifies who and what kind of access is allowed to the domain’s data. This key policy is better positioned to prevent unauthorized access than getting every related data service’s resource policy and every identity policy correct. Identity policies attached to IAM principals can be treated as controls that enable authorized access to services rather than prevent unauthorized access to data.

Hopefully you're convinced resource policies provide a warm, weighted security blanket for your data. But those policies are complicated and are not going to write themselves. Let's deal with that.

Simplify and scale IAM configuration with usable automation

Leverage infrastructure code to elevate your AWS security posture.

Security policies are often complex and nuanced, but perhaps no more so than other Cloud resources such as databases and compute clusters. We don't want to think through the details of any of these on every change.

That's why it's useful to package expert knowledge into reusable libraries and tools that:

- provide a simple interface that non-experts understand

- generate great security policies

- integrate well with your delivery process

- unload Cloud security specialists and accelerate delivery

Writing secure IAM policies is an exacting, tedious practice. Everything needs to be just so. But we've already established a general form for secure policies, even least privilege ones. A lot of them look the same.

We can generate sophisticated security policies with automation. But only if that automation is usable by the engineers that need to use it.

Usability is necessary to scale security.

So what does a usable policy generator interface look like? (examples in a moment)

First, specify who the user of this generator is.

The target user is every engineer in your org who will likely need to secure a resource within their control. Yes, explicitly target non-experts in AWS cloud security.

These generators need to work for regular application, cloud, site reliability, and security engineers, not only cloud security specialists.

Enable these engineers to create great security policies, so cloud security specialists can focus on other things like architecting and creating secure building blocks like secure policy generators. Empowering non-experts with safe tools and effective training is the most effective way to scale security when using automated delivery.

Second, simplify the interface to AWS IAM.

Application engineers and collaborating colleagues like SREs usually have the best knowledge of who needs access to data and which service API capabilities their application needs. But people are easily overwhelmed by the tens or hundreds of API actions in each relevant AWS service.

Eight hours of research to create a policy, or two hours to review one, isn't going to work. Access management needs to make sense and be pretty darn secure right away.

Engineers need to be able to read and write the intended access capability in plain language, quickly and safely.

Simplify defining intended access so engineers can choose from a small set of consistent categories non-experts understand such as:

- administer resource

- read config

- read data

- write data

- delete data

Use no more than seven categories. This enables users to choose the appropriate categories using their imprecise knowledge [1].

Some examples of simplified IAM access models are:

- AWS Access Levels: List, Read, Write, Permissions Management, Tagging

- k9 Security Access Capabilities: administer-resource, read-config, read-data, write-data, delete-data, use-resource

These are the types of categories non-experts should use to declare their intended access to AWS APIs and specific resources.

With a simplified access model, engineers can declare intended access accurately and quickly.
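A small, closed vocabulary is also easy to validate. The sketch below models the five categories listed earlier as an enumeration and rejects any declaration that uses a name outside that set. The function name and the declaration format (capability name mapped to a list of principal ARNs) are assumptions made for this example.

```python
from enum import Enum


class Capability(Enum):
    """The five access categories from the list above."""
    ADMINISTER_RESOURCE = "administer-resource"
    READ_CONFIG = "read-config"
    READ_DATA = "read-data"
    WRITE_DATA = "write-data"
    DELETE_DATA = "delete-data"


def parse_access_declaration(declaration: dict) -> dict:
    """Validate capability names and return {Capability: sorted unique ARNs}."""
    parsed = {}
    for name, arns in declaration.items():
        try:
            capability = Capability(name)
        except ValueError:
            valid = ", ".join(c.value for c in Capability)
            raise ValueError(
                f"unknown capability {name!r}; choose one of: {valid}"
            ) from None
        parsed[capability] = sorted(set(arns))
    return parsed
```

An engineer who writes "read-everything" gets an error that lists the valid choices, so a typo or a guess fails at authoring time instead of turning into an unintended grant.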

Let's see what this looks like as a standalone tool and a composable infrastructure code library.

Standalone tool for usable policy generation: Policy Sentry

Policy Sentry (by Salesforce) is a great example of a standalone tool that simplifies least privilege identity policy generation. Engineers specify intended access in a configuration file using the AWS Access Level model. This configuration declares an identity policy granting access to secrets in AWS Systems Manager and Secrets Manager:

    mode: crud
    read:
    - 'arn:aws:ssm:us-east-1:123456789012:parameter/myparameter'
    write:
    - 'arn:aws:ssm:us-east-1:123456789012:parameter/myparameter'
    list:
    - 'arn:aws:ssm:us-east-1:123456789012:parameter/myparameter'
    tagging:
    - 'arn:aws:secretsmanager:us-east-1:123456789012:secret:mysecret'
    permissions-management:
    - 'arn:aws:secretsmanager:us-east-1:123456789012:secret:mysecret'

When you run policy_sentry, it generates a policy like:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "SsmReadParameter",
          "Effect": "Allow",
          "Action": [
            "ssm:GetParameter",
            "ssm:GetParameterHistory",
            "ssm:GetParameters",
            "ssm:GetParametersByPath",
            "ssm:ListTagsForResource"
          ],
          "Resource": [
            "arn:aws:ssm:us-east-1:123456789012:parameter/myparameter"
          ]
        },
        {
          "Sid": "SsmWriteParameter",
          "Effect": "Allow",
          "Action": [
            "ssm:DeleteParameter",
            "ssm:DeleteParameters",
            "ssm:LabelParameterVersion",
            "ssm:PutParameter"
          ],
          "Resource": [
            "arn:aws:ssm:us-east-1:123456789012:parameter/myparameter"
          ]
        },
        {
          "Sid": "SecretsmanagerPermissionsmanagementSecret",
          "Effect": "Allow",
          "Action": [
            "secretsmanager:DeleteResourcePolicy",
            "secretsmanager:PutResourcePolicy"
          ],
          "Resource": [
            "arn:aws:secretsmanager:us-east-1:123456789012:secret:mysecret"
          ]
        },
        {
          "Sid": "SecretsmanagerTaggingSecret",
          "Effect": "Allow",
          "Action": [
            "secretsmanager:TagResource",
            "secretsmanager:UntagResource"
          ],
          "Resource": [
            "arn:aws:secretsmanager:us-east-1:123456789012:secret:mysecret"
          ]
        }
      ]
    }

Then you can take that policy and apply it to the right identity via the console or some automation.

policy_sentry is a very useful tool, and organizations can adopt it regardless of which delivery technologies they use. However its capabilities are not natively accessible from common automated infrastructure delivery processes. So adopters need to integrate the tool and generated policies into their delivery workflows.

Alternatively, infrastructure code libraries can provide a simplified interface.

Integrated infra code library for usable policy generation: k9 Terraform for KMS

Infrastructure code libraries can provide simplified, declarative access control interfaces. When you integrate libraries that generate secure policies into automated delivery processes:

- engineers work within tools they already know

- pipelines deploy secure policies continuously

You'll need libraries that integrate with your supported infrastructure automation technologies. But the simplification can be profound.

Consider this Terraform configuration which generates a KMS Key resource policy:

    # Define which principals may access the key and what capabilities they should have
    locals {
      administrator_arns = [
        "arn:aws:iam::123456789012:user/ci",
        "arn:aws:iam::123456789012:user/person1"
      ]

      read_config_arns = concat(local.administrator_arns, [
        "arn:aws:iam::123456789012:role/auditor"
      ])

      read_data_arns = [
        "arn:aws:iam::123456789012:user/person1",
        "arn:aws:iam::123456789012:role/appA",
      ]
      write_data_arns = local.read_data_arns
    }

    module "least_privilege_key_resource_policy" {
      source = "k9securityio/kms-key/aws//k9policy"

      allow_administer_resource_arns = local.administrator_arns
      allow_read_config_arns         = local.read_config_arns
      allow_read_data_arns           = local.read_data_arns
      allow_write_data_arns          = local.write_data_arns
    }

Engineers already comfortable with Terraform can write that easily with information they already have. This is the interface to the k9 Security Terraform library for KMS keys. The library generates a policy stronger than most Cloud security specialists would write, but everyone can actually read the definition and learn from the result.

Integrate with your delivery process

Before selecting a tool, think about the delivery process.

Who should be writing and reviewing policies? Those are your users.

What are those people's existing delivery tools? How are those tools evolving? These are the technologies the 'simplifying tool' needs to work with. Consider whether an access simplifying tool written in Python is going to be well-adopted by a Java application team. How will you manage upgrades? Distribution matters.

When does policy authoring and review occur? These are the moments within the development and delivery process that the tool really needs to work. Effort should be spent on managing access, not shuffling files around.
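One moment where a tool can do real work is the review step in a delivery pipeline. As an illustration of the kind of check that could run there, the sketch below inspects a key policy for two departures from the general form described earlier: an Allow statement open to every principal without an aws:PrincipalArn condition, and the absence of any Deny statement. The function name and the wording of the findings are hypothetical, and the check matches condition key names case-sensitively for simplicity.

```python
def _as_list(value):
    """Policy elements may be a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def _is_wildcard_principal(principal) -> bool:
    """True for "Principal": "*" and for {"AWS": "*"}."""
    if principal == "*":
        return True
    return isinstance(principal, dict) and "*" in _as_list(principal.get("AWS", []))


def lint_key_policy(policy: dict) -> list:
    """Return human-readable findings; an empty list means no issue was detected."""
    findings = []
    statements = _as_list(policy.get("Statement", []))
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        condition = statement.get("Condition", {})
        narrowed = any("aws:PrincipalArn" in keys for keys in condition.values())
        if _is_wildcard_principal(statement.get("Principal")) and not narrowed:
            sid = statement.get("Sid", "<no Sid>")
            findings.append(f"{sid}: allows every principal without an aws:PrincipalArn condition")
    if not any(s.get("Effect") == "Deny" for s in statements):
        findings.append("no Deny statement closes off access granted by identity policies")
    return findings
```

A check like this runs in milliseconds, so it fits equally well in a pre-commit hook or a pipeline stage, and its findings use the same vocabulary engineers already see in the policies they declare.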

Congratulations

Once you simplify how you're going to use AWS IAM, you've completed a major step. Choosing a few core security patterns and components lets you:

- enable engineers to use the knowledge they already have in their head

- focus and build expertise in a few techniques and tools instead of trying to learn IAM minutiae

- productize your selected techniques so your whole organization can use them

- train your team on those techniques so your organization uses them effectively

- gain a lot of experience across your organization using a few techniques so that people can help each other instead of relying on security specialists

These simplifications help engineers cross Norman's Gulf of Execution. Now engineers can easily figure out how to use IAM. We'll cross the Gulf of Evaluation using a similar approach in the next chapter.

With execution of AWS security controls simplified, you can sleep much better tonight.

[1] Norman, Donald A. The Design of Everyday Things (pp. 75-76). Basic Books. Kindle Edition.